There’s hardly a tech entrepreneur who has never heard of microservices, an emerging style of application development. However, with all the tech disruption, most concepts never get beyond the buzzword stage. So microservices architecture may sound like yet another overhyped idea to most of us. Okay, Netflix, Amazon, and eBay have migrated to microservices. So what? Why take on all the complexity of MSA? What on earth can beat the good old monolith?

Life can. Today’s business environment is all about disruption and digitization, smarter business models, faster time to market, a battle of UXs, cloud-native and serverless everything, ambient computing, and information security. The new business reality calls for more flexible, resilient, fault-tolerant, device-agnostic solutions, where complexity is an intrinsic hallmark of system excellence.

And the first step to excellence is awareness.

In this post we’ll give a brief introduction to microservices architecture (MSA): its essence, the good and the bad, whether you need it, and how to implement it.

Use the plan to jump to a question of your choice:

- What is microservices architecture

- The good and the bad of microservices

- When does your product need microservices

- Microservices implementation: virtualization, containerization, dockerization

- Should I go for virtualization or containerization?

- Docker and alternatives in container technology

- What do container orchestration tools do

What is microservices architecture

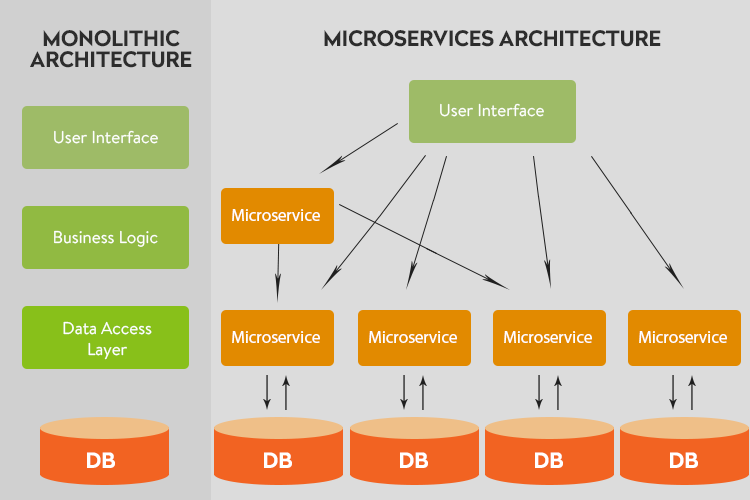

Not all software systems are made the same. In fact, there’s a full spectrum – from ‘monoliths’ (giant one-piece bundles of code) to ‘microservices’ (tiny single-function apps communicating with each other).

Microservices architecture focuses on creating software as single-function components (microservices) with well-defined interfaces and operations that communicate via APIs.

So, in close-up, the two systems will look like this:

But microservices architecture isn’t merely the unbundling of a monolith. What counts is how various functionalities are identified as business capabilities and then split into fully independent, fine-grained, and self-contained microservices. This gives the architecture certain distinctive features that make it a better fit for some business cases than for others.

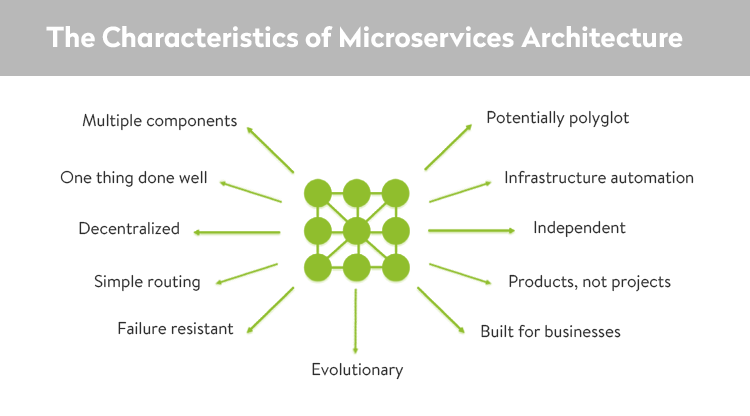

The standards of microservices architecture

An application designed as microservices will most certainly have some, if not all, of these characteristics, which may well count as the standards of MSA.

Let’s go on to explain what those features entail.

Multiple components

An app is a suite of components communicating via APIs. This makes it possible to develop, tweak, scale, and deploy each component faster and without threatening the integrity of the entire application.

One thing done well

Each component focuses on a single specific function. A rule of thumb reads ‘as small as possible, but as big as necessary’ to represent the domain concept it owns. If the code grows too complicated over time, a component can be split further.

Decentralized

A product is designed as a distributed system with decentralized data management: in a microservices application, each service usually manages its own database. The architecture also favors decentralized governance that allows others to benefit from the app.

Simple routing

Microservices follow the principle of smart endpoints and dumb pipes, which works much like pipes and filters in classic UNIX. All the business logic is located within the services, leaving the communication between the nodes as lightweight as possible.
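To make the principle concrete, here is a minimal Python sketch that uses an in-process stand-in for the network. The `DumbPipe` class only delivers serialized JSON between named endpoints, while the two hypothetical services (`pricing_service`, `discount_service`) hold all the business logic. The discount rule is invented for illustration.

```python
import json
from typing import Callable, Dict

Handler = Callable[[str], str]


class DumbPipe:
    """Moves serialized messages between named endpoints; no routing rules or logic."""

    def __init__(self) -> None:
        self._endpoints: Dict[str, Handler] = {}

    def connect(self, name: str, handler: Handler) -> None:
        self._endpoints[name] = handler

    def send(self, name: str, payload: str) -> str:
        # Deliver the raw payload and hand back the raw reply untouched.
        return self._endpoints[name](payload)


def pricing_service(payload: str) -> str:
    # Smart endpoint: parses the order and computes its total.
    order = json.loads(payload)
    total = sum(item["price"] * item["qty"] for item in order["items"])
    return json.dumps({"total": total})


def discount_service(payload: str) -> str:
    # Smart endpoint with a hypothetical rule: 10% off totals of 100 or more.
    total = json.loads(payload)["total"]
    if total >= 100:
        total = round(total * 0.9, 2)
    return json.dumps({"total": total})


if __name__ == "__main__":
    pipe = DumbPipe()
    pipe.connect("pricing", pricing_service)
    pipe.connect("discount", discount_service)
    priced = pipe.send("pricing", json.dumps({"items": [{"price": 40, "qty": 3}]}))
    print(pipe.send("discount", priced))  # {"total": 108.0}
```

Because the pipe never inspects or transforms messages, it could be swapped for HTTP or a message queue without touching the services.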

Failure resistant

No service is immune to failure. Microservices, however, are ‘designed for failure’: the failure of a single service doesn’t shut down the entire system. Other instances of the service (replicas) take over right away, provided there’s a working monitoring system in place.
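Here is a minimal sketch of the idea. It assumes each replica is a plain callable and that a failed replica raises `ConnectionError`. `call_with_failover` and the replica functions are hypothetical names, not part of any framework. Real systems pair this with monitoring, so dead replicas are removed rather than retried on every request.

```python
from typing import Callable, List


class ReplicaUnavailable(Exception):
    """Raised when every replica of a service has failed."""


def call_with_failover(replicas: List[Callable[[], str]]) -> str:
    # Try replicas in order; one failing instance does not fail the request.
    errors: List[Exception] = []
    for replica in replicas:
        try:
            return replica()
        except ConnectionError as exc:
            errors.append(exc)
    raise ReplicaUnavailable(f"all {len(replicas)} replicas failed: {errors}")


def crashed_replica() -> str:
    raise ConnectionError("replica-1 is down")


def healthy_replica() -> str:
    return "order 42 confirmed"


if __name__ == "__main__":
    print(call_with_failover([crashed_replica, healthy_replica]))  # order 42 confirmed
```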

Evolutionary

This sort of architecture suits long-lived, evolving systems where you can’t anticipate which devices will communicate with the app. A monolith can evolve into microservices, too, if unforeseen requirements call for adding components that interact with the older system via APIs.

Potentially polyglot

Implementation details of one service should not matter to another. The services are decoupled from one another and built autonomously with the language, tools, persistence store, and methodology a team finds appropriate.

Infrastructure automation

Build and deployment automation is mandatory when working with microservices. When dozens of services run as independent processes that come together as a single application, manual deployment is bound to produce failures.

Independent

Each component is built, deployed, modified, or scaled independently and on demand. This enables multiple teams to work independently. Isolation, along with readable single-function code that has fewer dependencies, makes each component faster to build and easier to test, deploy, rewire, and scale.

Products, not projects

Instead of engaging in projects (the traditional way), cross-functional teams build products out of individual services that communicate via a message bus.

Built for businesses

The principal focus of the architecture is on business capabilities and priorities rather than on technology or app layers. The principle ‘you build it, you run it’, aka DevOps, makes teams responsible for building and maintaining the product throughout its lifecycle.

The good and the bad of microservices

Every innovation comes with initial challenges. Stepping out of the comfort zone is inevitable. The adoption of a new method or technology entails a learning curve and a ramp-up. But the long-term merits make it worth the effort.

The merits of microservices

The style keeps your business abreast of the times by ensuring:

- Scalability, built into the app design. The ability to add more instances (not hardware) on demand, known as horizontal scaling, delivers top performance cost-effectively. Add to that the better capacity elasticity of microservices.

- Speed of independent testing, deployment, scaling, and rewiring, thanks to clean single-function code with few dependencies.

- Quality of the code similar to OOP: improved reusability, composability, and maintainability. Its modularity is enforced by the architecture.

- Reusability of services across a business, which reduces development costs.

- Innovation in methodology, use of tools and technology, and more responsible attitude to a product’s lifecycle management.

- Flexibility, since you avoid lock-in to particular technologies or languages and can make changes on the fly with the resources available.

The challenges of microservices

Martin Fowler hints at the ultimate challenge of MSA with ‘The first rule of distributed objects: don’t distribute them’. Besides the fallacies of distributed systems, you’ll have to embrace the architectural and operational complexities that come with the territory. The major concerns relate to these four areas:

- Communication between services. Distributed systems, which microservices effectively are, face regular challenges with latency, bandwidth, and network reliability. Communication protocols should be as lightweight as possible, and certain new practices have to be adopted (more on that below).

- Migration from a monolith to microservices requires painstaking unbundling of dependencies all the way down to the database layer.

- Versions are tricky to control. More parts require more control over versioning. Container sprawl calls for certain best practices, such as routing-based versioning at the API level.

- Organization of the process. Adopting a new product development methodology requires both hard and soft skills, as well as a transition to a DevOps culture.
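Routing-based versioning, from the list above, puts the version in the request path. This lets old and new clients call the same service side by side. Here is a minimal Python sketch that assumes paths of the form `/v1/users/<id>`. The handlers, the data, and the route table are hypothetical.

```python
import re
from typing import Callable, Dict, Tuple

Handler = Callable[[str], dict]


def get_user_v1(user_id: str) -> dict:
    # Original contract: a single "name" field.
    return {"id": user_id, "name": "Ada Lovelace"}


def get_user_v2(user_id: str) -> dict:
    # Breaking change: the name is split into two fields.
    return {"id": user_id, "first_name": "Ada", "last_name": "Lovelace"}


# The version is part of the route, so v1 and v2 clients can coexist.
ROUTES: Dict[Tuple[str, str], Handler] = {
    ("v1", "users"): get_user_v1,
    ("v2", "users"): get_user_v2,
}

PATH = re.compile(r"^/(v\d+)/(\w+)/(\w+)$")


def route(path: str) -> dict:
    match = PATH.match(path)
    if match is None:
        raise ValueError(f"malformed path: {path}")
    version, resource, resource_id = match.groups()
    handler = ROUTES.get((version, resource))
    if handler is None:
        raise LookupError(f"no handler for {version}/{resource}")
    return handler(resource_id)


if __name__ == "__main__":
    print(route("/v1/users/7"))
    print(route("/v2/users/7"))
```

Retiring v1 later means deleting one route entry once its clients have migrated. The v2 code stays untouched.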

So MSA is not for everyone. And a company surely has to reach certain stages of readiness to adopt the new culture of DevOps.

When does your product need microservices

The views on that vary. On the one hand, big players and several industry movements have been building awareness and pushing for microservices architecture adoption based on a host of use cases:

- Financial Services

- Insurance Services

- IoT Solutions

- Video streaming solutions, like Netflix

- Social media solutions, like Twitter

- Logistics solutions, like Uber

- E-commerce websites, like eBay

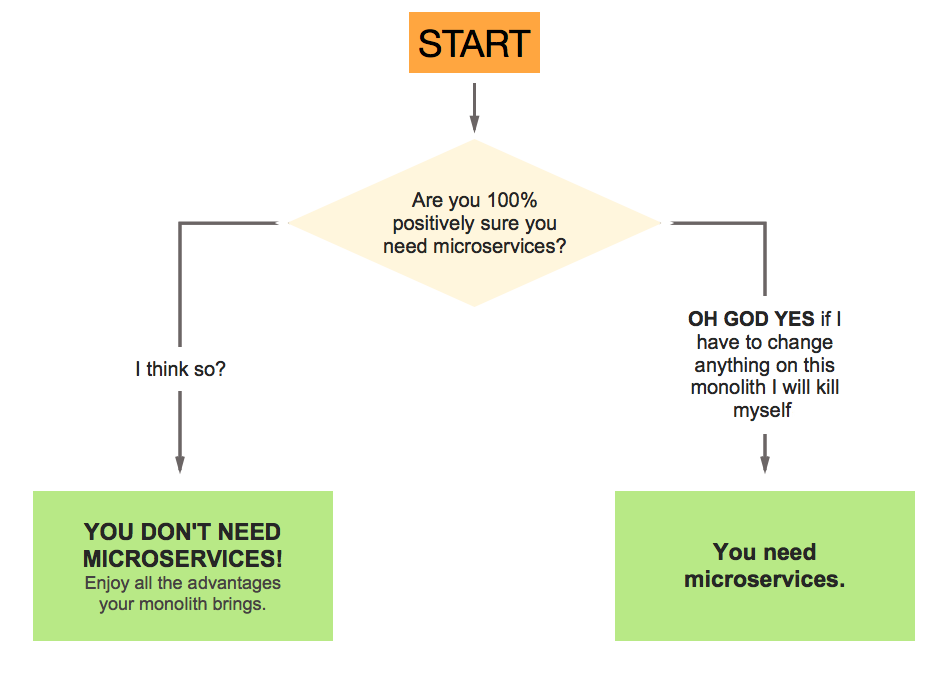

On the other hand, there’s a tongue-in-cheek, restrictive algorithm for going MSA:

Image courtesy of Stavros Korokithakis

The truth is always in-between.

If horizontal scaling is needed

Most agree that the primary reason to use microservices architecture is an app’s need to scale horizontally, which is where nothing compares to microservices.

Horizontal scaling done via microservices is cost-efficient and performant due to the added elasticity of containerization. Different parts of your app might have different scalability needs, so adding instances of a particular microservice (without scaling the entire app) eliminates bottlenecks while using resources sparingly.
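The per-service arithmetic fits in a few lines of Python. Assume, hypothetically, that each instance of a service handles a known number of requests per second. The number of instances a service needs is then its load divided by per-instance capacity, rounded up. The service names and figures below are invented for illustration.

```python
import math
from typing import Dict


def replicas_needed(load_rps: float, capacity_rps: float, min_replicas: int = 1) -> int:
    """Smallest instance count whose combined capacity covers the load."""
    return max(min_replicas, math.ceil(load_rps / capacity_rps))


def scaling_plan(services: Dict[str, Dict[str, float]]) -> Dict[str, int]:
    # Each service is sized independently against its own load.
    return {
        name: replicas_needed(spec["load_rps"], spec["capacity_rps"])
        for name, spec in services.items()
    }


if __name__ == "__main__":
    services = {
        "search": {"load_rps": 900, "capacity_rps": 200},
        "checkout": {"load_rps": 120, "capacity_rps": 150},
        "profile": {"load_rps": 40, "capacity_rps": 100},
    }
    print(scaling_plan(services))  # {'search': 5, 'checkout': 1, 'profile': 1}
```

Only `search` is scaled out here. Absorbing the same `search` peak with a monolith would mean replicating every part of the app, including the lightly loaded `checkout` and `profile` code.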

If your app is large

As regards project size, large projects will benefit from scalability and independent deployments, as these give them flexibility and plenty of leeway in adding processing power to their stack to serve increased workloads. Small projects will have less overhead with a monolith-first strategy. Once you realize microservices are worthwhile, decompose or sacrifice your initial monolith. There’s a rule of thumb, however: you should not attempt microservices unless you know the domain really well.

If reusability is important

The same components can be reused, and a modular architecture makes it easy to repurpose code. If you write applications that need to be integrated with third-party apps or configured with different functionality for different customers, it’s faster and easier to use prefabricated code blocks. You’ll only add some on-demand custom features rather than develop the entire app from scratch. Of course, microservices aren’t the only solution here, but they enforce modular development around business capabilities.

If innovation & speed are vital

Innovative models, whether business or technology ones, can make all the difference to your market standing. Faster operations, more precision, and more talented engineers all thrive in environments where decision making belongs to teams and domain experts, not the powerful few. Fiefdoms are not such a bad thing after all. And a product owner still owns an excellent product even if they can’t look under the hood for intellectual property reasons. It’s a matter of priority. How important is innovation to your business? And how do you go about it? Building a new runtime and compiler because PHP is not fast enough, like Facebook did? Or using a third-party service since you won’t get to market fast enough with a team of eight?

But not with old & ugly code

Not all applications are fit for microservices and containerization. We wouldn’t recommend it for:

- Mainframe-based apps written in old languages like Fortran or COBOL, or in proprietary ones, and using old databases. Either rebuild them from scratch or wait for next-gen tools to handle the process.

- Poorly designed apps need refactoring prior to containerization. Microservices follow a strict set of patterns.

- Apps that are hard to segregate from their datastore are tough for immediate containerization. Decoupling the data from the application level can be a pain in the neck.

Microservices implementation: virtualization, containerization, dockerization

Making sense of vocabulary is the first step to meeting your needs. Now that we’ve grasped the concept of microservices, the core question is what execution environment they should run in. There are several runtime options – a physical server, a virtual server, or a container.

Since running software on bare physical servers is incredibly wasteful today, software predominantly runs on virtual machines (virtualized), or is ‘packaged’ in containers (containerized) to make it portable, dynamically configured, and easily orchestrated. With Docker containers, the entire code and its dependencies can be fit into a neat little package, an image (dockerized).

So how do the three terms correlate?

Virtualization: for hardware

Virtualization creates a virtual representation of an app, server, storage device, network, or even an operating system, dividing the underlying resource into one or more execution environments.

Containerization: for operating system

Containerization is OS-level virtualization. It can run multiple workloads on a single OS installation, further sparing precious server memory. Unlike virtual machines, which mimic an entire server, containers are lightweight and run on a shared host OS. When it comes to resource consumption, containers are like apartments while VMs are like entire detached houses (love this ‘housing’ analogy by Mike Coleman).

Dockerization: containerization via Docker platform

Docker is the most widely used containerization technology. Because of the popularity of Docker containers, the term dockerization has grown synonymous with containerization (implying the use of Docker technology).

Should I go for virtualization or containerization?

A microservice may run in a container, but it could also run in a fully provisioned VM. You can run microservices without containers, but you’d better not, for a number of reasons. Or you may need multiple OSes, in which case you’ll have to run containers inside VMs. The choice depends on many variables.

To begin with, what are your priorities? If security is vital, virtualization may work better. As Daniel J. Walsh puts it, ‘containers do not contain’. Opting for Docker will require special expertise.

Another factor is the character of the application. VMs are not suitable for real-time apps, as booting takes minutes compared to seconds with containers. For most stateful apps, the ones that are not scaled frequently, it’s best to go for a virtual machine, where the necessary scalability is pretty well defined. Containerization best fits modular, stateless apps designed for scaling, e.g. mobile apps, real-time data apps, etc. The control plane for those apps therefore has to be designed to be distributed and easily scalable.

The more functionality you need to containerize, the more you should be biased towards using a VM. Remember, a container is for a single-function app.

Containers are preferred for reasons of resource economy. A VM is heavy in resource consumption: it needs a full copy of the OS and virtualized hardware to run, which adds up to a lot of RAM and CPU cycles. You can also develop and test more effectively by mirroring production environments. Creating a portable and consistent environment for dev, test, and delivery is where containers are truly disruptive.

On the whole, this table may guide you in your choice:

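| Use Containers if you need… | Use Virtual Machines if you need… |
|---|---|
| Resource economy, with no full OS copy per app | Stronger isolation where security is vital |
| Boot-up in seconds, e.g. for real-time apps | Multiple operating systems |
| Modular, stateless apps designed for scaling | Stateful apps with well-defined, infrequent scaling |
| Portable, consistent dev, test, and delivery environments | A lot of functionality in a single unit |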

Docker and alternatives in container technology

A Docker container fulfills the need for something as versatile as a VM, but lighter in terms of storage, abstracted enough to run anywhere, and independent of the language used for development.

But the existence of alternatives doesn’t mean container technology is fragmented into incompatible container formats. In fact, the Open Container Initiative (OCI) has done its best to standardize the technology so anyone can take advantage of it. So the alternatives are mostly tools and software that support the standardized technology. All the major players have invested in the initiative collectively. The project’s sponsors include AWS, Google, IBM, HP, Microsoft, VMware, Red Hat, Oracle, and Twitter, as well as Docker and CoreOS.

So besides Docker, other container options include OCID, CoreOS’ Rocket (rkt), Canonical’s LXC and LXD, and OpenVZ. The engines come with supporting tools, for example the Docker Toolbox, which simplifies setting up Docker for developers, and Docker Trusted Registry for image management.

What do container orchestration tools do

Engines like Docker provide basic support for defining simple multi-container applications, for example via Docker Compose. Full orchestration, however, involves scheduling how and when containers should run, managing clusters, and provisioning extra resources, often across multiple hosts. Container orchestration tools include dedicated ones, such as Docker Swarm, Google Kubernetes, Apache Mesos, and Amazon ECS (to name a few), as well as general-purpose, open-source ones such as Chef, Puppet, and Ansible. These tools help contain the ‘chaos’ of microservices through the procedures below.

Clustering

Now that you have tons of tiny batches of code, how do you manage them? Clustering lets you share resources, schedule tasks, and treat many running processes as one unified, scalable, and well-behaved solution across all workloads. A cluster manager keeps track of the resources (memory, CPU, and storage). Of course, you use tools to set up this process: Docker Swarm, Kubernetes, etcd, Tectonic, or AWS ECS.

Messaging

To be operational, microservices have to ‘communicate’. We mentioned the ‘smart endpoints and dumb pipes’ principle, which keeps messaging protocols as lightweight as possible. But how do you implement it?

- HTTP APIs. HTTP is a standard communications protocol supported by virtually every language. There are useful libraries and tools for working with it, like hapi or superagent.

- Message queues. These help when you have long-running worker tasks or mass processing. Tools like RabbitMQ and amqp help put messages in queues.
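The queue pattern can be sketched with Python’s standard library. Here `queue.Queue` and worker threads act as an in-process stand-in for a broker such as RabbitMQ; this is not the RabbitMQ or AMQP API. The producer only enqueues jobs and the workers only consume them. The squaring step is a placeholder for a long-running task.

```python
import queue
import threading
from typing import List, Optional


def worker(tasks: "queue.Queue[Optional[int]]", results: List[int], lock: threading.Lock) -> None:
    while True:
        job = tasks.get()
        if job is None:  # sentinel: the producer has no more work
            tasks.task_done()
            return
        value = job * job  # placeholder for a long-running task
        with lock:
            results.append(value)
        tasks.task_done()


def process(jobs: List[int], n_workers: int = 3) -> List[int]:
    tasks: "queue.Queue[Optional[int]]" = queue.Queue()
    results: List[int] = []
    lock = threading.Lock()
    threads = [
        threading.Thread(target=worker, args=(tasks, results, lock))
        for _ in range(n_workers)
    ]
    for thread in threads:
        thread.start()
    for job in jobs:  # the producer just enqueues and moves on
        tasks.put(job)
    for _ in threads:  # one sentinel per worker, queued after all jobs
        tasks.put(None)
    tasks.join()  # wait until every queued item has been processed
    for thread in threads:
        thread.join()
    return sorted(results)  # workers finish in arbitrary order


if __name__ == "__main__":
    print(process([1, 2, 3, 4, 5]))  # [1, 4, 9, 16, 25]
```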

Service registry and service discovery

Consistency is vital when a large number of microservices have to be orchestrated successfully. Their locations can vary in a distributed environment due to dynamic changes. So it’s important to be able to locate a microservice at runtime. The procedure includes these steps:

- Service registry, holding special metadata on every service. Microservice instances are registered with the service registry on startup and de-registered on shutdown. Registrator or SkyDNS do the job.

- Service discovery. Services need to discover each other dynamically to get the IP address and port details required to communicate with other services in a cluster. There are two types of service discovery mechanisms: client-side discovery and server-side discovery. Kubernetes has it built in. Other useful service discovery tools are Consul and Zookeeper.

- Health check. ‘Healthy’ services handle traffic, while ‘unhealthy’ ones are pruned out. A health checker (e.g., Kubelet, Prometheus) updates the service registry.

- Load balancing, which uses the service registry. Traffic destined for a particular service should be dynamically load-balanced across all instances providing that service. That’s what nginx and Amazon ELB do. Other tools are Kube-proxy and Citrix.
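The four steps can be sketched as one small in-memory registry in Python, assuming client-side discovery and round-robin load balancing. `ServiceRegistry` and its methods are hypothetical names, not the API of Consul, Zookeeper, or Kubernetes. The `probe` function stands in for a real health check such as an HTTP ping.

```python
from typing import Callable, Dict, List, Tuple

Address = Tuple[str, int]  # (host, port)


class ServiceRegistry:
    def __init__(self) -> None:
        self._instances: Dict[str, List[Address]] = {}
        self._next: Dict[str, int] = {}  # round-robin position per service

    def register(self, service: str, addr: Address) -> None:
        # Called by an instance on startup.
        instances = self._instances.setdefault(service, [])
        if addr not in instances:
            instances.append(addr)

    def deregister(self, service: str, addr: Address) -> None:
        # Called by an instance on shutdown.
        instances = self._instances.get(service, [])
        if addr in instances:
            instances.remove(addr)

    def health_check(self, probe: Callable[[Address], bool]) -> None:
        # Prune every instance whose probe fails.
        for service, instances in list(self._instances.items()):
            self._instances[service] = [a for a in instances if probe(a)]

    def discover(self, service: str) -> List[Address]:
        # Client-side discovery: return the current healthy instances.
        return list(self._instances.get(service, []))

    def pick(self, service: str) -> Address:
        # Round-robin load balancing over the registered instances.
        instances = self._instances.get(service)
        if not instances:
            raise LookupError(f"no healthy instances of {service!r}")
        index = self._next.get(service, 0) % len(instances)
        self._next[service] = index + 1
        return instances[index]


if __name__ == "__main__":
    registry = ServiceRegistry()
    for host in ("10.0.0.1", "10.0.0.2", "10.0.0.3"):
        registry.register("cart", (host, 8080))
    print([registry.pick("cart")[0] for _ in range(4)])
    registry.health_check(lambda addr: addr[0] != "10.0.0.2")
    print(registry.discover("cart"))
```

In a real cluster, the health checker would run on a timer and instances would register themselves. Keeping all four concerns behind one registry object is a simplification for the sketch.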

Scheduling

A scheduler runs containerized apps with regard to available resources, current tasks, and requests. Among the scheduler’s jobs are scaling, assigning tasks to different hosts, and moving workloads in case of failures. Container scheduling tools (Fleet, Marathon (Mesos), Swarm, Kubernetes) aim at efficient use of a cluster’s resources: honoring user-supplied placement constraints, placing containers promptly so they don’t linger in a pending state, ensuring ‘fairness’, and providing error resilience and availability.
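Here is a toy version of the placement step in Python. It assumes CPU in millicores, memory in MiB, and label-based placement constraints. It uses a simple heuristic chosen for illustration: place the most-constrained and largest containers first, each on the feasible host with the most free CPU. This is not the actual algorithm of Kubernetes, Swarm, Marathon, or Fleet. A container that fits nowhere stays pending, shown as `None`. All host and container names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Host:
    name: str
    free_cpu: int  # millicores still available
    free_mem: int  # MiB still available
    labels: Set[str] = field(default_factory=set)


@dataclass
class Container:
    name: str
    cpu: int
    mem: int
    required_labels: Set[str] = field(default_factory=set)  # placement constraint


def schedule(containers: List[Container], hosts: List[Host]) -> Dict[str, Optional[str]]:
    placement: Dict[str, Optional[str]] = {}
    # Most-constrained and largest requests first, so they are less likely to stay pending.
    ordered = sorted(
        containers,
        key=lambda ct: (len(ct.required_labels), ct.cpu, ct.mem),
        reverse=True,
    )
    for ct in ordered:
        feasible = [
            h for h in hosts
            if ct.required_labels <= h.labels
            and h.free_cpu >= ct.cpu
            and h.free_mem >= ct.mem
        ]
        if not feasible:
            placement[ct.name] = None  # pending: no host can take it right now
            continue
        target = max(feasible, key=lambda h: h.free_cpu)  # spread load
        target.free_cpu -= ct.cpu
        target.free_mem -= ct.mem
        placement[ct.name] = target.name
    return placement


if __name__ == "__main__":
    hosts = [Host("node-a", 4000, 8192, {"ssd"}), Host("node-b", 4000, 4096)]
    containers = [
        Container("api", 1000, 1024),
        Container("db", 2000, 4096, {"ssd"}),
        Container("web", 500, 512),
        Container("batch", 3000, 2048),
        Container("gpu-job", 1000, 1024, {"gpu"}),
    ]
    print(schedule(containers, hosts))
    # {'db': 'node-a', 'gpu-job': None, 'batch': 'node-b', 'api': 'node-a', 'web': 'node-a'}
```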

Wrapping up

To build or not to build your application as microservices? It depends on your ultimate goals, your readiness to cope with the challenges, and your understanding of the future. Distributed systems, cloud-native and serverless everything, and a fast pace are just a taste of things to come. With the complexity of MSA come its fault tolerance, flexibility, device agnosticism, and many other benefits. The method is new and the tools are nascent, but it’s here to stay and will make many a project shine with new colors. Need more guidance? We’re here for you.