The machine learning revolution has brought a new trend to town: face recognition. When Apple announced its FaceID feature, everyone started talking about implementing face recognition everywhere, from business and mobile apps to medicine and retail.

But how can you be sure this technology is what you need without understanding it thoroughly?

No worries! Today we’ll tell you what face recognition is, how it works, and what its different use cases are. Let’s just say that after reading this article, you’ll be a real Jedi of face recognition.

What is face recognition

Face (or facial) recognition is a biometric technology that identifies or verifies a person by analyzing patterns in their facial contours and comparing them, often in real time, against stored records.

How does face recognition work

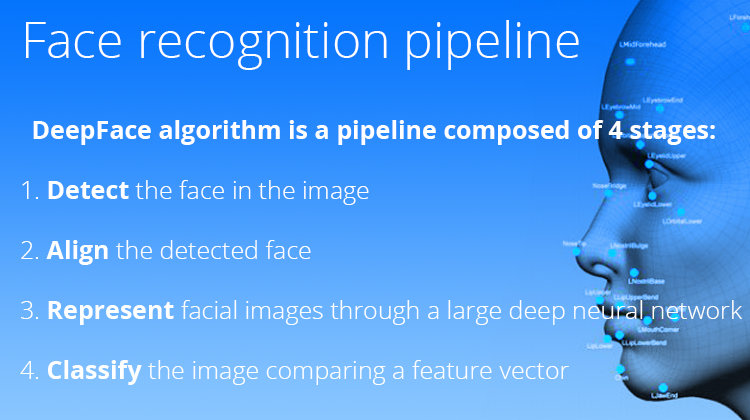

A face recognition system takes an image or a video stream as input and outputs the identification or verification of the subject or subjects that appear in it. Face recognition software can be designed in several ways, but most designs share these three main stages:

- Detection

- Alignment

- Recognition

Detection

Detection is the step in which the computer determines the location and size of human faces in arbitrary digital images. It detects facial features and ignores everything else, such as buildings, trees, and bodies. So the first thing the computer has to do is decide whether there is a face in front of it at all.

Researchers have been working on face detection for a long time. It all started in 1973, when Kanade built the first automated system. Since then, several more methods have been invented.

| Year | Authors | Method |
|---|---|---|
| 1973 | Kanade | First automated system |
| 1987 | Sirovich & Kirby | Principal Component Analysis |
| 1991 | Turk & Pentland | Eigenface |
| 1996 | Etemad & Chellappa | Fisherface |
| 2001 | Viola & Jones | AdaBoost + Haar Cascade |
| 2007 | Naruniec & Skarbek | Gabor Jets |

2001 was a breakthrough year for face detection: the Viola-Jones object detection framework became the first algorithm able to detect faces in real time.

The algorithm receives a photo as a set of values describing the color of each individual pixel. To find out where the face is in an image or a video, it looks for areas of contrast between light and dark parts of the image: the bridge of the nose is usually lighter than the areas on either side of it, and the eye sockets are darker than the forehead. The program slides rectangular patterns of white and black boxes over the grayscale image and, at each position, calculates the difference between the pixel values under the white boxes and those under the black boxes. By repeating this scan across the whole image, it can detect faces.
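To make the white-box and black-box idea more concrete, here is a minimal sketch of a single Haar-like feature in plain Python. It is not the full Viola-Jones detector: there is no AdaBoost training and no cascade. The function names and the tiny test patch are our own illustrative assumptions, and doubling the middle column so that a flat region scores zero is a design choice made for this sketch.

```python
def integral_image(gray):
    """Build a summed-area table with an extra zero row and column."""
    height, width = len(gray), len(gray[0])
    table = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += gray[y][x]
            table[y + 1][x + 1] = table[y][x + 1] + row_sum
    return table


def box_sum(table, top, left, height, width):
    """Sum of the pixels inside a rectangle, using only four lookups."""
    bottom, right = top + height, left + width
    return (table[bottom][right] - table[top][right]
            - table[bottom][left] + table[top][left])


def nose_bridge_feature(table, top, left, height, column_width):
    """Three-column feature: bright middle column versus two darker sides.

    The middle column is weighted by 2 so a uniformly lit region scores 0
    (an assumption of this sketch).
    """
    left_side = box_sum(table, top, left, height, column_width)
    middle = box_sum(table, top, left + column_width, height, column_width)
    right_side = box_sum(table, top, left + 2 * column_width, height, column_width)
    return 2 * middle - (left_side + right_side)


# Hypothetical 2x6 grayscale patch: dark cheeks, bright nose bridge.
patch = [
    [50, 50, 200, 200, 50, 50],
    [50, 50, 200, 200, 50, 50],
]
table = integral_image(patch)
score = nose_bridge_feature(table, top=0, left=0, height=2, column_width=2)
print("feature response:", score)
```

A large positive response suggests the bright-between-dark pattern is present at that position. A real detector evaluates a great many such features at many positions and scales, and the summed-area table is what keeps each evaluation cheap.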

Alignment

Initial attempts at facial identification tried to mimic the way humans recognize faces. The computer would divide the face into visible landmarks called nodal points, which include things like the depth of the eye sockets, the distance between the eyes, and the width of the nose. The differences between these areas would then be used to create a unique code, a person’s own faceprint. But there was a problem: you can’t really get the same view of your face in two photos. Our faces are in constant flux; unlike fingerprints, they are not static.

One solution to this problem is to create a 3D model of the face. Alignment is then achieved by using the 3D model to warp the face into a canonical frontal representation.
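Full 3D warping is too involved for a short example, so the sketch below swaps in a much simpler 2D stand-in: it rotates and scales the face so that the eyes sit level and a fixed distance apart. This is not the 3D method described above. The function names, the eye coordinates, and the 60-pixel target distance are all illustrative assumptions.

```python
import math


def eye_alignment(left_eye, right_eye, target_distance=60.0):
    """Return (angle_in_degrees, scale) needed to level and normalize the eyes."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    angle = math.degrees(math.atan2(dy, dx))      # tilt of the eye line
    scale = target_distance / math.hypot(dx, dy)  # zoom to the target spacing
    return angle, scale


def align_point(point, left_eye, angle_degrees, scale):
    """Map a point into the aligned frame, with the left eye at the origin."""
    theta = math.radians(-angle_degrees)  # rotate back by the measured tilt
    x = point[0] - left_eye[0]
    y = point[1] - left_eye[1]
    return (scale * (x * math.cos(theta) - y * math.sin(theta)),
            scale * (x * math.sin(theta) + y * math.cos(theta)))


# Hypothetical landmark positions in pixels, for a tilted face.
left_eye, right_eye = (100.0, 100.0), (130.0, 140.0)
angle, scale = eye_alignment(left_eye, right_eye)
aligned_right_eye = align_point(right_eye, left_eye, angle, scale)
print(f"rotate by {-angle:.1f} degrees, scale by {scale:.2f}")
print("right eye after alignment:", aligned_right_eye)
```

After alignment the right eye lands on the horizontal axis at the target distance from the left eye, so two photos of the same person taken at different tilts and distances become easier to compare.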

Recognition

Recognition itself is the classification of detected, aligned, and normalized faces into known identities. Basically, it answers the question ‘Who is it?’ Today this is usually done with a deep convolutional neural network, which passes the face through many stages of representation to produce a compact description of it. That description is compared with the stored ones, and the final decision can be made by a simple thresholding operation on the dissimilarity measure.
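The final thresholding step can be sketched in a few lines. The example assumes each face has already been turned into a short list of numbers (an embedding) by some network. The three-number embeddings, the names in the gallery, and the 0.6 threshold are made up for illustration; real systems use much longer vectors and tune the threshold on their own data.

```python
import math


def euclidean_distance(a, b):
    """Dissimilarity measure: straight-line distance between two embeddings."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def identify(probe, gallery, threshold=0.6):
    """Return the closest known identity, or None if nobody is close enough."""
    best_name, best_distance = None, float("inf")
    for name, embedding in gallery.items():
        distance = euclidean_distance(probe, embedding)
        if distance < best_distance:
            best_name, best_distance = name, distance
    # The thresholding step: accept the best match only if it is similar enough.
    return best_name if best_distance <= threshold else None


# Hypothetical stored embeddings for two enrolled people.
gallery = {
    "alice": [0.1, 0.9, 0.3],
    "bob": [0.8, 0.2, 0.5],
}

print(identify([0.15, 0.85, 0.35], gallery))  # close to alice's stored embedding
print(identify([0.9, 0.9, 0.9], gallery))     # far from everyone in the gallery
```

Raising the threshold makes the system more forgiving but more likely to accept the wrong person; lowering it does the opposite.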

Uses of face recognition technology

Accessing personal devices

Unlocking your phone with your fingerprint is commonplace now. But after the 2017 Apple Event, the world is interested in the next big thing: unlocking your personal devices with your face. iPhone X started the hype around face recognition for mobile apps and phones, and it looks like it is going to stick around for a while.

Unlocking your car

Gartner predicts that the number of IoT connected devices will reach 20 billion by 2020. The first example that comes to mind is cars. Today’s cars can recognize and respond to their surroundings, and we are already used to the idea. Thanks to machine learning, self-driving cars are becoming a reality. But what if they could actually recognize their owners? That would be pretty amazing.

Targeted advertising

And of course, what technological breakthrough would be complete without marketing teams getting all over it? Snack maker Mondelez International is already trying out smart shelves in US stores. Cameras in the aisles can estimate your age and gender and use that to offer you things you might like. This is the next level of targeted advertising.

Marketing feedback

What you might not have considered is that facial recognition can be used to judge a person’s level of engagement, which can help you discover the best ways to connect with potential and existing customers.

For example, Walmart has also joined the face recognition race. It plans to develop its own FaceTech system to gain insights into customer satisfaction. One day, this may well become standard procedure for all major retailers.

Securing data

Facial recognition gives us a new way of securing important data. I think everybody remembers at least one spy movie where really important information was stored in a room that scanned a person’s face. These technologies are no longer confined to the movies; they are among us.

Mental health diagnosis

It can be helpful in medicine too. Face recognition is showing promise in detecting some diseases. But what about health issues that aren’t that easy to diagnose? Mental health problems are among them. Face recognition can make it easier to track a patient’s expressions, judge the extent of their distress, and come closer to an accurate diagnosis.

Also, people who struggle with face blindness (prosopagnosia) could greatly benefit from facial recognition technology.

And for those of us who forget names, there’s also an app called ‘NameTag’ that takes a snap of a person and finds their online profile for you.

Security tracking

Face recognition can be used for a good cause, such as security tracking. This year, Download Festival became the first outdoor event in the UK to scan its crowds for known troublemakers. Cameras were placed strategically around the festival, monitoring the 90,000-strong crowd. Shopkeepers are using similar software to build their own databases of known shoplifters and alert security when one of them enters the store.

Day care

As parents, we all want our children to be safe and sound. And as day care owners, we want a reputation as a highly trusted place. Face recognition software can verify the identity of the individuals picking up the children.

Social robot interactions

The future is here, and social robot helpers need not only to perform the required tasks but also to recognize their owners and understand people’s emotions to provide a better user experience.

Benefits of face recognition

No more fraud

We are constantly on the lookout for new ways to prevent fraud. PIN codes, passwords, and CAPTCHAs are not enough: with the evolution of artificial intelligence and machine learning, it is becoming much easier to fool such security systems. Mastercard is actually exploring whether taking a selfie can be a viable way of authenticating a credit card purchase.

Less money spent

When it comes to business, the first question you’ll ask about face recognition is ‘How much will it cost me?’

Well, face recognition is one of the least expensive biometrics on the market.

Easy and fast to use

Face recognition is known for its convenience and social acceptability. It is one of the few biometrics capable of operating without active user cooperation. And it works in real time, so all you need to do is look at the camera, and in a few seconds it knows who you are.

Reduce product loss

Facial recognition software can reduce product loss at stores. It can spot known shoplifters and notify security staff so they can escort them out of the store. It can also help with the problem of employee theft: employees are less likely to steal when they know there is software that can catch them and tell the shop owners exactly who is stealing.

The downside of face recognition

Privacy loss

There’s an elephant in the room, though. Face recognition is great, but every great thing comes at a price. And the price we pay is our privacy. Sure, we want social events to be safer and our shopping experience to be better. But are we ready to be under constant watch?

This technology is a very powerful tool, and it should never end up in the wrong hands, unless we want a whole new creepy kind of stalking to emerge.

In fact, Japan’s National Institute of Informatics has created privacy glasses with special lenses that absorb light in order to mask facial features from facial recognition software. But that technology might already be obsolete. Something called FaceIt Argus can identify you using your skin. The technique is known as Surface Texture Analysis. It works like facial recognition but creates a ‘skinprint’ instead, and it is accurate enough to distinguish between identical twins, something a faceprint really struggles to do.

What if someone steals my face

Face recognition is not perfect; it still needs a lot of work. For example, the facial recognition on Samsung’s Galaxy Note 8 can be fooled with a photo, so the problem of spoofing needs to be addressed quickly. After all, no one wants to end up in the next episode of Game of Thrones. Yes, the show is great, but we do not want to get our faces stolen.

Examples of face recognition

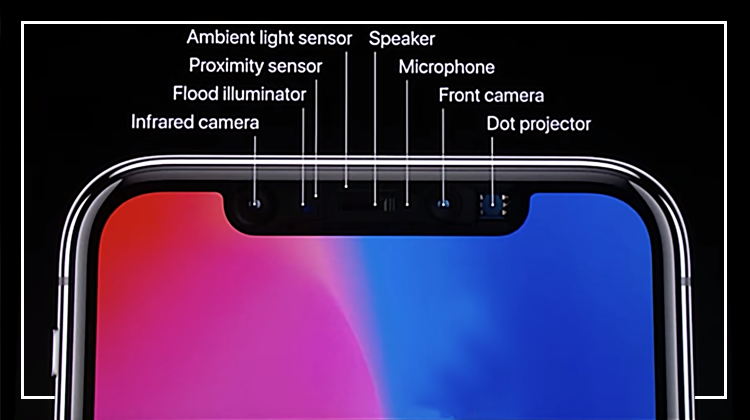

iPhone X’s FaceID feature

iPhone X can recognize its owner. The FaceID feature lets you unlock the phone just by looking at it. This is possible thanks to the TrueDepth camera system, housed in the small notch at the top of the screen. It contains a whole bunch of different sensors that make this technology as accurate as possible:

- Infrared camera

- Flood illuminator

- Front camera

- Dot projector

- Proximity sensor

- Ambient light sensor

And here’s how it works. The flood illuminator lights up your face with infrared light so that iPhone X can detect it even in the dark. The IR camera takes an IR image, and the dot projector projects over 30,000 invisible IR dots onto your face. The IR image and the dot pattern are pushed through neural networks to create a mathematical model of your face. The phone then compares this model with the one you set up earlier to see if it is a match.

To set up FaceID, you simply follow the instructions your phone gives you: you slowly move your head in a circle to let FaceID learn your face. It works even if you change your hairstyle or put on glasses or a hat.

And last, the statistics: the chance of another person unlocking your phone with their fingerprint is 1 in 50,000, while the chance of another person unlocking it with their face is 1 in 1,000,000. As you can see, it’s a pretty secure technology.
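To get a feel for what these two figures mean, here is a small back-of-the-envelope calculation. It assumes every attempt is an independent try by a random stranger, which is a simplification, and the crowd of 1,000 strangers is a hypothetical scenario of our own.

```python
def chance_of_false_match(rate, attempts):
    """Probability that at least one of `attempts` independent tries is accepted."""
    return 1 - (1 - rate) ** attempts


FINGERPRINT_RATE = 1 / 50_000   # fingerprint figure quoted in the article
FACE_RATE = 1 / 1_000_000       # face figure quoted in the article

# How many times less likely a random face match is than a random fingerprint match.
improvement = FINGERPRINT_RATE / FACE_RATE

# Hypothetical scenario: 1,000 different strangers each try once.
fingerprint_risk = chance_of_false_match(FINGERPRINT_RATE, 1_000)
face_risk = chance_of_false_match(FACE_RATE, 1_000)

print(f"Random face match is {improvement:.0f}x less likely than a fingerprint match")
print(f"1,000 strangers vs fingerprint: {fingerprint_risk:.2%}")
print(f"1,000 strangers vs face: {face_risk:.2%}")
```

Under these assumptions, even a thousand strangers would have roughly a 2% chance of getting past the fingerprint sensor and about a 0.1% chance of getting past the face scan.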

KFC’s ‘Smile to Pay’ system

KFC is introducing a new way to pay for food in China: a smile. A new concept store in the city of Hangzhou has introduced a ‘Smile to Pay’ system that uses facial recognition. So how does it work? You walk up to an ordering kiosk, place your order, click ‘pay’, and pick a table at the restaurant. Then you look up at the camera to authenticate your payment and type in your phone number to seal the deal.

The machine uses a 3D camera and a ‘liveness detection’ algorithm. These technologies are meant to prevent scammers from simply holding up photos of other people. The goal is to attract a younger generation of KFC customers.

‘Smile to Pay’ has passed its accuracy test. With heavy makeup, a different hairstyle, or even a combination of these factors in a crowded scene with multiple people, it still identified the person correctly.

Google’s Clips camera

This camera is unlike any other camera you have ever used. It is made by Google and, like everything Google makes these days, it uses machine learning to do something pretty special. This camera lets the computer decide when to hit the shutter button. You just turn it on and point it at the world, and the computer figures out whether something interesting is happening and takes a picture of it.

Google Clips can recognize your face and your family’s faces. Here’s how it works. You turn it on and pair it with your phone, and once it’s paired, you can put your phone away if you want to. You twist the lens on the Clips to the ‘on’ position, and a little LED light starts blinking to let you know that it is on and looking for something to record. The idea is that you just set it down or clip it to something and let it do its thing. It looks for what Google’s algorithms think might be interesting and then records a little 7-second clip of it. It might be your kids playing or somebody smiling just right.

The camera learns over time. With the help of facial recognition, it can recognize your face, your family’s faces, and even your pets. Eventually, it learns to take photos of some people and ignore others. Well, yes, this sounds super creepy. But worry not! All of the data is stored only on the camera, fully encrypted, and can only be transferred to the phone you have paired it with. And all the face recognition happens on the device too.

So Google Clips has become an extraordinary example of face recognition implementation.

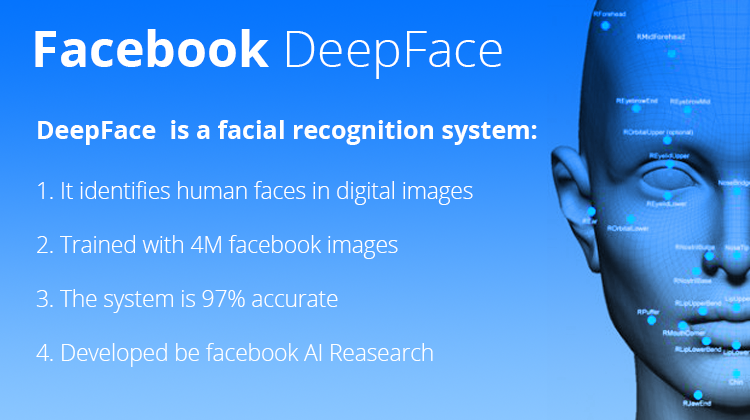

Facebook’s DeepFace

The Facebook research group has created a deep learning facial recognition system called DeepFace. It can take a 2D photo of a person and create a 3D model of the face. This allows the face to be rotated, so pictures taken from different angles or in different poses can be compared. Aging is no longer a problem either: the ‘faceprint’ can be built from areas of the face with rigid tissue and bone, such as the curves of the eye sockets, the nose, or the chin, which apparently don’t change much as we age.

But the main reason for DeepFace’s heightened accuracy is a machine learning technique called ‘deep learning’, in which the algorithm keeps checking whether it is on the right track. Each time it matches two faces correctly or incorrectly, it records the steps it took, building up a kind of roadmap. The more times it repeats the process, the more connections appear on its map and the more accurate it becomes at the task. The idea is for the computer to build a network of connections, much like the network of interconnected neurons in our brains.

Facebook’s neural network has more than 120 million parameters, and the pool of potential training data keeps growing with every photo that is uploaded and tagged. The larger the data set, the better the computer can become. Facebook’s advantage is that the data needed to train the computer to recognize faces is already on the platform, in the form of a library of 4.4 million labeled faces.

Conclusion

We hope this article has shed some light on the topic of face recognition. Now that you understand how the technology works, it’s your move to come up with ideas for applying it to your specific business or project. Face recognition is a fast-moving train heading straight to the top of the technology trends, so hurry up and book a ticket.
